These are just my notes on getting this up and running. I’d bought a 3.2″ touchscreen to use with a Pi on a project. However, the supplier provided a precompiled ISO image rather than drivers and instructions, so these are my notes from taking it apart to work with my own image. First up, get a keyboard on it and open a terminal (this is horrid with so little screen real estate):

sudo apt-get install xrdp

This installs xrdp, an RDP server, so you can then use Remote Desktop to get in, which is much nicer and easier to use. The user is pi and the password is raspberry (the defaults).

First I went after config.txt, but there seems to be nothing in there, which is annoying.

cmdline.txt does seem to have some extra bits:

fbcon=map:1 fbcon=font:PrFont6x11

This looks like it’s worth investigating. These are framebuffer console options: fbcon=map:1 puts the console on /dev/fb1, which is where the FBTFT driver’s display shows up (there’s some info on the driver here). This also identifies the display as the BCM2708 SPI display controller, and if you look at the docs, that line is given as one that needs to be added. So if we look through that driver info we should be able to find where the display is set up…
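To see exactly which fbcon options an image is passing, a quick sketch like this pulls them out of the kernel command line. It assumes cmdline.txt is the usual single line of space-separated parameters and that every fbcon= option is written as key:value. fbcon_options is just a name I made up for this.

```python
def fbcon_options(cmdline: str) -> dict:
    """Collect every fbcon=key:value option from a kernel command line.

    fbcon= may appear several times (here once for map, once for font),
    so each occurrence is split on the first ':' into its own dict entry.
    """
    options = {}
    for token in cmdline.split():
        if not token.startswith("fbcon="):
            continue
        key, _, value = token[len("fbcon="):].partition(":")
        options[key] = value
    return options
```

On the Pi you would feed it the contents of the file, e.g. fbcon_options(open("/boot/cmdline.txt").read()), assuming the usual /boot location. For this image that should give {'map': '1', 'font': 'PrFont6x11'}.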

So we now need to look at /etc/modules. We might even find the touch driver in here too…

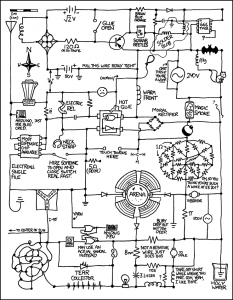

This file is VERY different from the stock file. The first, obvious changes are that SPI and I2C are enabled; I’d imagine the corresponding blacklist entries have been removed too. Now we have lines for fbtft_device, flexfb and ads7846_device, all of which are part of the above site. This is handy, as it means that likely all you need is here. In theory, as the modules have been merged into the main tree, you *should* have these already.

So the lines we need seem to be:

fbtft_device name=flexfb gpios=dc:22,reset:27 speed=48000000

flexfb width=320 height=240 buswidth=8 init=-1,0xCB,0x39,0x2C,0x00,0x34,0x02,-1,0xCF,0x00,0XC1,0X30,-1,0xE8,0x85,0x00,0x78,-1,0xEA,0x00,0x00,-1,0xED,0x64,0x03,0X12,0X81,-1,0xF7,0x20,-1,0xC0,0x23,-1,0xC1,0x10,-1,0xC5,0x3e,0x28,-1,0xC7,0x86,-1,0x36,0x28,-1,0x3A,0x55,-1,0xB1,0x00,0x18,-1,0xB6,0x08,0x82,0x27,-1,0xF2,0x00,-1,0x26,0x01,-1,0xE0,0x0F,0x31,0x2B,0x0C,0x0E,0x08,0x4E,0xF1,0x37,0x07,0x10,0x03,0x0E,0x09,0x00,-1,0XE1,0x00,0x0E,0x14,0x03,0x11,0x07,0x31,0xC1,0x48,0x08,0x0F,0x0C,0x31,0x36,0x0F,-1,0x11,-2,120,-1,0x29,-1,0x2c,-3

ads7846_device model=7846 cs=1 gpio_pendown=17 speed=1000000 keep_vref_on=1 swap_xy=0 pressure_max=255 x_plate_ohms=60 x_min=200 x_max=3900 y_min=200 y_max=3900
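That init= string is far easier to follow once it’s split into individual commands. As I understand the fbtft init convention, -1 starts a command (the next value is the opcode and the non-negative values after it are its parameters), -2 is followed by a delay in milliseconds, and -3 ends the sequence. The sketch below decodes it on that assumption. The DCS_NAMES table only labels a few standard MIPI DCS opcodes and is my own addition, not something taken from the vendor’s image.

```python
from typing import List, Optional, Tuple

# A few standard MIPI DCS opcode names, added for readability (an assumption,
# not part of the vendor's config). Anything else is labelled "vendor/other".
DCS_NAMES = {
    0x11: "exit_sleep_mode",
    0x26: "set_gamma_curve",
    0x29: "set_display_on",
    0x2C: "write_memory_start",
    0x36: "set_address_mode",
    0x3A: "set_pixel_format",
}

Step = Tuple[str, Optional[int], List[int]]


def parse_init(value: str) -> List[int]:
    """Turn the comma-separated init= value into ints (handles 0x, 0X and decimal)."""
    return [int(token, 0) for token in value.split(",")]


def decode_init(seq: List[int]) -> List[Step]:
    """Split an fbtft-style init array into (kind, value, params) steps."""
    steps: List[Step] = []
    i = 0
    while i < len(seq):
        marker = seq[i]
        if marker == -1:
            # Command: opcode, then every following non-negative value is a parameter.
            opcode = seq[i + 1]
            i += 2
            params = []
            while i < len(seq) and seq[i] >= 0:
                params.append(seq[i])
                i += 1
            steps.append(("cmd", opcode, params))
        elif marker == -2:
            # Delay: the next value is a time in milliseconds.
            steps.append(("delay_ms", seq[i + 1], []))
            i += 2
        elif marker == -3:
            steps.append(("end", None, []))
            break
        else:
            raise ValueError(f"unexpected value {marker} at position {i}")
    return steps


def describe(steps: List[Step]) -> List[str]:
    """Render decoded steps as one human-readable line each."""
    lines = []
    for kind, value, params in steps:
        if kind == "cmd":
            name = DCS_NAMES.get(value, "vendor/other")
            args = " ".join(f"{p:#04x}" for p in params)
            lines.append(f"cmd {value:#04x} ({name}) {args}".rstrip())
        elif kind == "delay_ms":
            lines.append(f"sleep {value} ms")
        else:
            lines.append("end")
    return lines
```

Running describe(decode_init(parse_init(...))) over the whole init= value from the flexfb line should finish with exit_sleep_mode, a 120 ms sleep, set_display_on and write_memory_start.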

That’s quite a mouthful, so there is a copy of the working modules file. Check it against yours and copy and paste what’s needed rather than doing this all by hand. If you are going to edit it on a Windows machine, please use Notepad++, as it keeps the file’s Unix (LF) line endings and will save you a lot of pain.
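If you would rather not eyeball the two files side by side, a small sketch like this lists the lines from the working modules file that are missing from yours. It ignores blank lines, # comments, CRLF line endings and runs of whitespace, so a file that has been through a Windows editor still compares cleanly. missing_lines is a hypothetical helper, not part of any tool.

```python
from typing import List


def normalise(text: str) -> List[str]:
    """Meaningful lines of a modules file.

    splitlines() copes with CRLF endings; whitespace runs are collapsed and
    blank lines and # comments are dropped.
    """
    lines = []
    for raw in text.splitlines():
        line = " ".join(raw.split())
        if line and not line.startswith("#"):
            lines.append(line)
    return lines


def missing_lines(working: str, yours: str) -> List[str]:
    """Lines present in the working modules file but not in yours, in file order."""
    have = set(normalise(yours))
    return [line for line in normalise(working) if line not in have]
```

Pass it the text of the working copy and of your own /etc/modules; whatever it returns is what still needs adding (with sudo, since /etc/modules is owned by root).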

Interestingly, it looks like there are predefined setup modes for other displays here, such as the Adafruit one, which makes adding those displays much easier. It may be worth looking into, as it looks far simpler than the above mess.

Next up we need to check inittab…

Interestingly, they haven’t made any changes here. This should mean that the Pi’s main console is still intact. I don’t have anything on my desk to plug into right now, so I can’t verify this, but it does leave a way into the system if something breaks. There is a recommended line, but it looks like it would disable the main console. Now the question is, where is whatever is running on that screen coming from? The other obvious place would be rc.local, and it’s not from there. The next step is to make these changes on the dev system and see what happens.

Getting X running isn’t too hard now. You’ll need to move the fbturbo X driver config somewhere else. If you don’t, X will just bomb out with no screens found.

sudo mv /usr/share/X11/xorg.conf.d/99-fbturbo.conf ~

This drops it into your home directory. Keep it there, as you’ll need it if you want to re-enable fbturbo later.
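If you end up switching between the TFT and HDMI a lot, moving that file back and forth can be wrapped up in a couple of helpers. This is only a sketch: park and restore are hypothetical names, and on the real paths it has to run as root, since /usr/share is owned by root.

```python
import shutil
from pathlib import Path

# Path taken from the mv command; the helpers themselves are illustrative.
FBTURBO_CONF = Path("/usr/share/X11/xorg.conf.d/99-fbturbo.conf")


def park(conf: Path, backup_dir: Path) -> Path:
    """Move conf into backup_dir (e.g. your home dir) and return its new path."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / conf.name
    shutil.move(str(conf), str(target))
    return target


def restore(backup: Path, conf_dir: Path) -> Path:
    """Move a parked conf file back into conf_dir so X picks fbturbo up again."""
    target = conf_dir / backup.name
    shutil.move(str(backup), str(target))
    return target
```

park(FBTURBO_CONF, Path.home()) does the same job as the mv, and restore(that_result, FBTURBO_CONF.parent) puts it back.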

FRAMEBUFFER=/dev/fb1 startx

*should* now get you an X session. Have fun with that touchscreen, as it’s probably way out of whack 🙂 Press Ctrl+C to come back out.
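Putting FRAMEBUFFER=/dev/fb1 in front of startx sets the variable for that one command only. If you ever want to launch X on the TFT from a script instead, the equivalent is to hand subprocess.run a copy of the environment with FRAMEBUFFER added. x_env and start_x are illustrative names, and this assumes startx is on the PATH.

```python
import os
import subprocess
from typing import Dict, Mapping, Optional


def x_env(framebuffer: str = "/dev/fb1",
          base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Copy of the environment (or of base) with FRAMEBUFFER set.

    The original mapping is never modified, mirroring how a VAR=value
    prefix in the shell only affects the one command it precedes.
    """
    env = dict(os.environ if base is None else base)
    env["FRAMEBUFFER"] = framebuffer
    return env


def start_x(framebuffer: str = "/dev/fb1") -> int:
    """Run startx with FRAMEBUFFER pointing at the TFT and return its exit code."""
    return subprocess.run(["startx"], env=x_env(framebuffer)).returncode
```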

sudo mkdir -p /etc/X11/xorg.conf.d

My stock Raspbian didn’t have this directory but the updated one did, so you might not need to do this. In there, create 99-calibration.conf and put the following in it…

Section "InputClass"

Identifier "calibration"

MatchProduct "ADS7846 Touchscreen"

Option "Calibration" "215 3735 3938 370"

Option "SwapAxes" "1"

EndSection
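Copying that section out of a web page is where things tend to go wrong. Curly quotes pasted into an xorg.conf file are not the plain double quotes the X config parser looks for. The sketch below generates the section with ASCII quotes and refuses to write anything non-ASCII. calibration_section and write_calibration are hypothetical helpers, and the values are just the ones for this panel.

```python
from pathlib import Path
from typing import Tuple


def calibration_section(product: str, calibration: Tuple[int, int, int, int],
                        swap_axes: bool, identifier: str = "calibration") -> str:
    """Render an xorg.conf InputClass section using plain ASCII double quotes."""
    cal = " ".join(str(v) for v in calibration)
    lines = [
        'Section "InputClass"',
        f'    Identifier "{identifier}"',
        f'    MatchProduct "{product}"',
        f'    Option "Calibration" "{cal}"',
        f'    Option "SwapAxes" "{1 if swap_axes else 0}"',
        "EndSection",
    ]
    return "\n".join(lines) + "\n"


def write_calibration(path: Path, text: str) -> None:
    """Write the config, refusing non-ASCII text (e.g. pasted curly quotes)."""
    if not text.isascii():
        raise ValueError("non-ASCII characters (curly quotes?) in config text")
    path.parent.mkdir(parents=True, exist_ok=True)  # same job as the mkdir -p step
    path.write_text(text, encoding="ascii")
```

Writing to /etc/X11/xorg.conf.d/99-calibration.conf needs root, the same as creating the directory did.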

Save it, then try to start X again. With any luck you should have a working touchscreen. Note that while this is all pretty similar to the Adafruit unit, it’s different enough that their walkthrough won’t work for it.
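As for what the four Calibration numbers mean, the evdev driver documents the option as min-x max-x min-y max-y: the raw readings that should land on the screen edges. Below is a rough linear model of that. It assumes the 320x240 panel size from the flexfb line and deliberately ignores exactly when SwapAxes is applied, since I haven’t checked evdev’s order of operations. to_screen is a made-up helper, not evdev’s code.

```python
def to_screen(raw: int, lo: int, hi: int, size: int) -> int:
    """Map a raw touch reading onto pixels 0..size-1, assuming a linear panel.

    lo is the raw reading at the first pixel and hi the reading at the last,
    so lo > hi just means the axis runs backwards. Results are clamped to
    the screen.
    """
    if hi == lo:
        raise ValueError("calibration min and max must differ")
    pos = (raw - lo) * (size - 1) / (hi - lo)
    return max(0, min(size - 1, round(pos)))
```

With 3938 as the minimum and 370 as the maximum, a reading of 3938 lands on pixel 0 and 370 on pixel 239, so that axis simply runs the other way round from the panel’s raw values.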